When Money Attacks... Arcade games!

Here is Lord of Vermilion in action:

Lord of Vermilion is a touch arcade game from Square/Enix.

The Japanese commercial (where they advertise who the illustrators are; LOL):

Monday, February 18, 2008

Saturday, February 16, 2008

TMX Elmo - Fully Automated Elmo Toy (and Cookie Monster)

This Elmo laughs so hard that he falls down, and then he gets back up.

When Money Attacks... Sesame Street.

Here it is with a French Elmo and with the Cookie Monster:

And here a Shih Tzu dog faces off against the Elmo:

Labels:

Inventions - Silly,

Technology - Robotics

Friday, February 15, 2008

The History of Multi-touch

When money attacks... multi-touch technology!!!

The history of Multi-touch:

http://www.billbuxton.com/multitouchOverview.html

An Incomplete Roughly Annotated Chronology of Multi-Touch and Related Work

In the beginning ....: Typing & N-Key Rollover (IBM and others).

While it may seem a long way from multi-touch screens, the story of multi-touch starts with keyboards.

Yes they are mechanical devices, "hard" rather than "soft" machines. But they do involve multi-touch of a sort.

First, most obviously, we see sequences, such as the SHIFT, Control, Fn or ALT keys in combination with others. These are cases where we want multi-touch.

Second, there are the cases of unintentional, but inevitable, multiple simultaneous key presses which we want to make proper sense of, the so-called question of n-key rollover (where you push the next key before releasing the previous one).
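To make the two keyboard cases concrete, here is a minimal sketch in Python. It is not taken from any real keyboard firmware; the event format, the `decode_keys` name and the modifier set are all assumptions made for illustration. It treats a held SHIFT as an intentional chord, and it accepts a new key press even when the previous key has not yet been released (rollover).

```python
# Hypothetical event format: (key, action), where action is "down" or "up".
MODIFIERS = {"SHIFT", "CTRL", "ALT", "FN"}

def decode_keys(events):
    """Turn a stream of key down/up events into typed text.

    A character is emitted on key-down, regardless of whether earlier
    keys are still held, which is the behaviour rollover is meant to allow.
    """
    held = set()
    typed = []
    for key, action in events:
        if action == "down":
            if key not in MODIFIERS and key not in held:
                # Intentional multi-touch: SHIFT held while another key goes down.
                typed.append(key.upper() if "SHIFT" in held else key.lower())
            held.add(key)
        elif action == "up":
            held.discard(key)
    return "".join(typed)

# "h" goes down before "t" is released, and "e" before "h" is released.
events = [
    ("SHIFT", "down"), ("t", "down"), ("SHIFT", "up"),
    ("h", "down"), ("t", "up"),
    ("e", "down"), ("h", "up"), ("e", "up"),
]
print(decode_keys(events))
```

The overlapping presses still decode in the order the keys went down, which is the point of rollover handling.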


1982: Flexible Machine Interface (Nimish Mehta, University of Toronto).

· The first multi-touch system that I am aware of.

· Consisted of a frosted-glass panel whose local optical properties were such that, when viewed from behind with a camera, a black spot whose size depended on finger pressure appeared on an otherwise white background. This, with simple image processing, allowed multi-touch input for picture drawing, etc. At the time we discussed the notion of a projector for defining the context both for the camera and the human viewer.

· Mehta, Nimish (1982), A Flexible Machine Interface, M.A.Sc. Thesis, Department of Electrical Engineering, University of Toronto supervised by Professor K.C. Smith.
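The "simple image processing" is not described in any detail here, so the following is only a guess at the general idea, not Mehta's method: threshold the camera frame, group dark pixels into connected blobs, and treat each blob's area as a rough stand-in for finger pressure. The function name, the threshold value and the tiny test frame are all made up for the sketch.

```python
from collections import deque

def find_touches(image, threshold=128):
    """Return one (row_centroid, col_centroid, area) tuple per dark blob.

    `image` is a list of rows of grey levels (0 = black, 255 = white).
    Pixels darker than `threshold` count as part of a touch.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    touches = []
    for r in range(rows):
        for c in range(cols):
            if image[r][c] >= threshold or seen[r][c]:
                continue
            # Breadth-first flood fill over 4-connected dark pixels.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] < threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            area = len(pixels)  # larger spot ~ harder press, per the description
            cy = sum(p[0] for p in pixels) / area
            cx = sum(p[1] for p in pixels) / area
            touches.append((cy, cx, area))
    return touches

W, B = 255, 0
frame = [
    [W, W, W, W, W, W],
    [W, B, W, W, W, W],
    [W, W, W, B, B, W],
    [W, W, W, B, B, W],
]
for touch in find_touches(frame):
    print(touch)
```

Two separate blobs come back as two touches, each with its own position and size, which is all that "multi-touch" needs at the sensing level.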

1983: Soft Machines (Bell Labs, Murray Hill)

· This is the first paper that I am aware of in the user interface literature that attempts to provide a comprehensive discussion of the properties of touch-screen based user interfaces, what they call “soft machines”.

· While not about multi-touch specifically, this paper outlined many of the attributes that make this class of system attractive for certain contexts and applications.

· Nakatani, L. H. & Rohrlich, John A. (1983). Soft Machines: A Philosophy of User-Computer Interface Design. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI’83), 12-15.

1983: Video Place / Video Desk (Myron Krueger)

· A vision based system that tracked the hands and enabled multiple fingers, hands, and people to interact using a rich set of gestures.

· Implemented in a number of configurations, including table and wall.

· Didn’t sense touch, per se, so largely relied on dwell time to trigger events intended by the pose.

· Essentially “wrote the book” in terms of unencumbered (i.e., no gloves, mice, styli, etc.) rich gestural interaction. Work that was more than a decade ahead of its time and hugely influential, yet not as acknowledged as it should be.

· Krueger, Myron, W. (1983). Artificial Reality. Reading, MA: Addison-Wesley.

· Krueger, Myron, W. (1991). Artificial Reality II. Reading, MA: Addison-Wesley.

· Krueger, Myron, W., Gionfriddo, Thomas., & Hinrichsen, Katrin (1985). VIDEOPLACE - An Artificial Reality, Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI’85), 35 - 40.

Myron’s work had a staggeringly rich repertoire of gestures, multi-finger, multi-hand and multi-person interaction.

1984: Multi-Touch Screen (Bell Labs, Murray Hill NJ)

· A multi-touch touch screen, not tablet, integrated with a CRT on an interactive graphics terminal. Could manipulate graphical objects with fingers with excellent response time.

· Shown to me by Lloyd Nakatani (see above), who invited me to visit Bell Labs after seeing the presentation of our work at SIGCHI in 1985.

· I believe that the one that I saw in 1985 may be the one patented by Leonard Kasday (US Patent 4484179) in 1984, which was multitouch; however my recollection is that the one that I saw was capacitive, not optical.

· This work is at least contemporary with our work in Toronto, and likely precedes it.

· Since their technology was transparent and faster than ours, my view was that they were ahead of us, so we stopped working on hardware (expecting that we would get access to theirs), and focused on the software and the interaction side, which was our strength.

· Around 1990 I took a group from Xerox to see it since we were considering using it for a photocopy interface.

1985: Multi-Touch Tablet (Input Research Group, University of Toronto): http://www.billbuxton.com/papers.html#anchor1439918

· Developed a touch tablet capable of sensing an arbitrary number of simultaneous touch inputs, reporting both location and degree of touch for each.

· To put things in historical perspective, this work was done in 1984, the same year the first Macintosh computer was introduced.

· Used capacitance rather than optical sensing, so it was thinner and much simpler than camera-based systems.

· A Multi-Touch Three Dimensional Touch-Sensitive Tablet (1985). Videos at: http://www.billbuxton.com/buxtonIRGVideos.html

· Issues and techniques in touch-sensitive tablet input (1985). Videos at: http://www.billbuxton.com/buxtonIRGVideos.html

1986: Bi-Manual Input (University of Toronto)

In 1985 we did a study, published the following year, which examined the benefits of two different compound bi-manual tasks that involved continuous control with each hand.

The first was a positioning/scaling task. That is, one had to move a shape to a particular location on the screen with one hand, while adjusting its size to match a particular target with the other.

The second was a selection/navigation task. That is, one had to navigate to a particular location in a document that was currently off-screen, with one hand, then select it with the other.

Since bi-manual continuous control was still not easy to do (the ADB had not yet been released - see below), we emulated the Macintosh with another computer, a PERQ.

The results demonstrated that such continuous bi-manual control was both easy for users, and resulted in significant improvements in performance and learning.

See Buxton, W. & Myers, B. (1986). A study in two-handed input. Proceedings of CHI '86, 321-326.[video]

Despite this capability being technologically and economically viable since 1986 (with the advent of the ADB - see below - and later USB), there are still no mainstream systems that take advantage of this basic capability. Too bad.

This is an example of techniques developed for multi-device and multi-hand that can easily transfer to multi-touch devices.

1986: Apple Desktop Bus (ADB) and the Trackball Scroller Init (Apple Computer / University of Toronto)

The Macintosh II and Macintosh SE were released with the Apple Desktop Bus. This can be thought of as an early version of the USB.

It supported plug-and-play, and also enabled multiple input devices (keyboards, trackballs, joysticks, mice, etc.) to be plugged into the same computer simultaneously.

The only downside was that if you plugged in two pointing devices, by default, the software did not distinguish them. They both did the same thing, and if a mouse and a trackball were operated at the same time (which they could be), a kind of tug-of-war resulted for the tracking symbol on the screen.

My group at the University of Toronto wanted to take advantage of this multi-device capability and contacted friends at Apple's Advanced Technology Group for help.

Due to the efforts of Gina Venolia and Michael Chen, they produced a simple "init", called the trackballscroller init, that could be dropped into the systems folder.

It enabled the mouse, for example, to be designated the pointing device, and a trackball, for example, to control scrolling independently in X and Y. See, for example, Buxton, W. (1990). The Natural Language of Interaction: A Perspective on Non-Verbal Dialogues. In Laurel, B. (Ed.). The Art of Human-Computer Interface Design, Reading, MA: Addison-Wesley. 405-416.

They also provided another init that enabled us to grab the signals from the second device and use it to control a range of other functions. See, for example, Kabbash, P., Buxton, W. & Sellen, A. (1994). Two-Handed Input in a Compound Task. Proceedings of CHI '94, 417-423.

In short, with this technology, we were able to deliver the benefits demonstrated by Buxton & Myers (see above) on standard hardware, without changes to the operating system, and largely without changes even to the applications.

This is the closest that we came, without actually getting there, to supporting multi-point input - such as all of the two-point stretching, etc. that is getting so much attention now, 20 years later. It was technologically and economically viable then.

To our disappointment, Apple never took advantage of this - one of their most interesting - innovations.

1991: Bidirectional Displays (Bill Buxton & Colleagues, Xerox PARC)

· First discussions about the feasibility of making an LCD display that was also an input device, i.e., where pixels were input as well as output devices. Led to two initiatives. (Think of the paper-cup and string “walkie-talkies” that we all made as kids: the cups were bidirectional and functioned simultaneously as both a speaker and a microphone.)

· Took the high-res 2D a-Si scanner technology used in our scanners and added layers to make them displays. The bi-directional motivation got lost in the process, but the result was the dpix display (http://www.dpix.com/about.html);

· The Liveboard project. The rear projection Liveboard was initially conceived as a quick prototype of a large flat panel version that used a tiled array of bi-directional dpix displays.

1991: Digital Desk (Pierre Wellner, Rank Xerox EuroPARC, Cambridge)

· An early front projection tablet top system that used optical and acoustic techniques to sense both hands/fingers as well as certain objects, in particular, paper-based controls and data.

· Clearly demonstrated multi-touch concepts such as two finger scaling and translation of graphical objects, using either a pinching gesture or a finger from each hand, for example.

· A classic paper in the literature on augmented reality.

· Wellner, P. (1991). The DigitalDesk Calculator: Tactile manipulation on a desktop display. Proceedings of the Fourth Annual Symposium on User Interface Software and Technology (UIST '91), 27-33.

1992: Flip Keyboard (Bill Buxton, Xerox PARC): http://www.billbuxton.com/

· A multi-touch pad integrated into the bottom of a keyboard. You flip the keyboard to gain access to the multi-touch pad for rich gestural control of applications.

· Combined keyboard / touch tablet input device (1994). Video at: http://www.billbuxton.com/flip_keyboard_s.mov (video 2002 in conjunction with Tactex Controls)

Sound Synthesizer / Audio Mixer: Graphics on multi-touch surface defining controls for various virtual devices.

1992: Simon (IBM & Bell South)

· IBM and Bell South release what was arguably the world's first smart phone, the Simon.

· What is of historical interest is that the Simon, like the iPhone, relied on a touch-screen driven “soft machine” user interface.

· While only a single-touch device, the Simon foreshadows a number of aspects of the touch-driven mobile devices that we see today.

· Sidebar: my working Simon is one of the most prized pieces in my collection of input devices.

1992: Wacom (Japan)

In 1992 Wacom introduced their UD series of digitizing tablets. These were special in that they had multi-device / multi-point sensing capability. They could sense the position of the stylus and tip pressure, as well as simultaneously sense the position of a mouse-like puck. This enabled bimanual input.

Working with Wacom, my lab at the University of Toronto developed a number of ways to exploit this technology far beyond just the stylus and puck. See the work on Graspable/Tangible interfaces, below.

Their next two generations of tablets, the Intuos 1 (1998) and Intuos 2 (2001) series extended the multi-point capability. It enabled the sensing of the location of the stylus in x and y, plus tilt in x and tilt in y (making the stylus a location-sensitive joystick, in effect), tip pressure, and value from a side-mounted dial on their airbrush stylus. As well, one could simultaneously sense the position and rotation of the puck, as well as the rotation of a wheel on its side. In total, one was able to have control of 10 degrees of freedom using two hands.

While this may seem extravagant and hard to control, that all depended on how it was used. For example, all of these signals, coupled with bimanual input, are needed to implement any digital airbrush worthy of the name. With these technologies we were able to do just that with my group at AliasWavefront, again, with the cooperation of Wacom.

See also: Leganchuk, A., Zhai, S. & Buxton, W. (1998). Manual and Cognitive Benefits of Two-Handed Input: An Experimental Study. Transactions on Human-Computer Interaction, 5(4), 326-359.

1992: Starfire (Bruce Tognazzini, Sun Microsystems)

· Bruce Tognazzini produced a future envisionment film, Starfire, that included a number of multi-hand, multi-finger interactions, including pinching, etc.

1994-2002: Bimanual Research (AliasWavefront Toronto)

· Developed a number of innovative techniques for multi-point / multi-handed input for rich manipulation of graphics and other visually represented objects. Only some are mentioned specifically on this page.

· A number of videos illustrate these techniques, along with others: http://www.billbuxton.com/buxtonAliasVideos.html

· Also see papers on two-handed input to see examples of multi-point manipulation of objects at: http://www.billbuxton.com/papers.html#anchor1442822

1995: Graspable/Tangible Interfaces (Input Research Group, University of Toronto)

· Demonstrated concept and later implementation of sensing the identity, location and even rotation of multiple physical devices on a digital desk-top display and using them to control graphical objects.

· By means of the resulting article and associated thesis introduced the notion of what has come to be known as “graspable” or “tangible” computing.

· Fitzmaurice, G.W., Ishii, H. & Buxton, W. (1995). Bricks: Laying the foundations for graspable user interfaces. Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (CHI'95), 442–449.

1995: DSI Datotech (Vancouver BC)

· In 1995 this company made a touch tablet, the HandGear, capable of multipoint sensing. They also developed a software package, Gesture Recognition Technology (GRT), for recognizing hand gestures captured with the tablet.

· The company went out of business around 2002.

1995/97: Active Desk (Input Research Group / Ontario Telepresence Project, University of Toronto)

Around 1992 we made a drafting-table-sized desk that had a rear-projection data display, where the rear projection screen/table top was a translucent stylus-controlled digital graphics tablet (Scriptel). The stylus was operated with the dominant hand. Prior to 1995 we mounted a camera above the table top. It tracked the position of the non-dominant hand on the tablet surface, as well as the pose (open angle) between the thumb and index finger. The non-dominant hand could grasp and manipulate objects based on what it was over, by opening and closing the grip on the virtual object. This vision work was done by a student, Yuyan Liu.

Buxton, W. (1997). Living in Augmented Reality: Ubiquitous Media and Reactive Environments. In K. Finn, A. Sellen & S. Wilber (Eds.). Video Mediated Communication. Hillsdale, N.J.: Erlbaum, 363-384. An earlier version of this chapter also appears in Proceedings of Imagina '95, 215-229.

Simultaneous bimanual and multi-finger interaction on large interactive display surface

1997: T3 (AliasWavefront, Toronto)

T3 was a bimanual tablet-based system that utilized a number of techniques that work equally well on multi-touch devices, and have been used thus.

These include, but are not restricted to, grabbing the drawing surface itself from two points and scaling its size (i.e., zooming in/out) by moving the hands apart or towards each other (respectively). Likewise the same could be done with individual graphical objects that lay on the background. (Note, this was simply a multi-point implementation of a concept seen in Ivan Sutherland’s Sketchpad system.)

Likewise, one could grab the background or an object and rotate it using two points, thereby controlling both the pivot point and degree of the rotation simultaneously. Ditto for translating (moving) the object or page.

Of interest is that one could combine these primitives, such as translate and scale, simultaneously (ideas foreshadowed by Fitzmaurice’s graspable interface work – above).
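For the curious, the combined translate / scale / rotate from two grabbed points can be sketched with a little complex arithmetic. This is my own illustration, not the T3 implementation; the function names are hypothetical, and it assumes the two start points are distinct. Given where the two contact points started and where they are now, there is exactly one scale, rotation and translation that carries the first pair onto the second.

```python
import cmath

def two_point_transform(p1, p2, q1, q2):
    """Solve z -> m*z + t so that p1 -> q1 and p2 -> q2.

    Points are (x, y) tuples. Returns (scale, rotation_in_radians,
    (tx, ty)). The complex multiplier m carries scale and rotation together.
    """
    a1, a2 = complex(*p1), complex(*p2)
    b1, b2 = complex(*q1), complex(*q2)
    if a1 == a2:
        raise ValueError("the two start points must be distinct")
    m = (b2 - b1) / (a2 - a1)
    t = b1 - m * a1
    return abs(m), cmath.phase(m), (t.real, t.imag)

def apply_transform(point, scale, rotation, translation):
    """Apply the recovered transform to any other point of the object."""
    z = cmath.rect(scale, rotation) * complex(*point) + complex(*translation)
    return (z.real, z.imag)

# One point stays put at the origin; the other moves from (1, 0) to (0, 2):
# the object doubles in size and turns a quarter turn about the fixed point.
scale, rotation, translation = two_point_transform((0, 0), (1, 0), (0, 0), (0, 2))
print(scale, rotation, translation)
```

Because the stationary point acts as the pivot, the same calculation covers "hold with one hand, turn with the other" as well as symmetric stretching.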

Kurtenbach, G., Fitzmaurice, G., Baudel, T. & Buxton, W. (1997). The design and evaluation of a GUI paradigm based on tablets, two-hands, and transparency. Proceedings of the 1997 ACM Conference on Human Factors in Computing Systems, CHI '97, 35-42. [video].

1997: The Haptic Lens (Mike Sinclair, Georgia Tech / Microsoft Research)

· The Haptic Lens, a multi-touch sensor that had the feel of clay, in that it deformed the harder you pushed, and resumed its basic form when released. A novel and very interesting approach to this class of device.

· Sinclair, Mike (1997). The Haptic Lens. ACM SIGGRAPH 97 Visual Proceedings: The art and interdisciplinary programs of SIGGRAPH '97, Page: 179

1998: Tactex Controls (Victoria BC) http://www.tactex.com/

· Kinotex controller developed in 1998 and shipped in Music Touch Controller, the MTC Express in 2000. Seen in video at: http://www.billbuxton.com/flip_keyboard_s.mov

~1998: Fingerworks (Newark, Delaware).

· Made a range of touch tablets with multi-touch sensing capabilities, including the iGesture Pad. They supported a fairly rich library of multi-point / multi-finger gestures.

· Founded by two University of Delaware academics, John Elias and Wayne Westerman

· Largely based on Westerman’s thesis: Westerman, Wayne (1999). Hand Tracking, Finger Identification, and Chordic Manipulation on a Multi-Touch Surface. U of Delaware PhD Dissertation.

· http://www.ee.udel.edu/~westerma/main.pdf

· Company is now out of business.

· Assets, including Elias and Westerman, acquired by Apple.

· However, documentation, including tutorials and manuals, is still downloadable from: http://www.fingerworks.com/downloads.html

1999: Portfolio Wall (AliasWavefront, Toronto On, Canada)

· A product that was a digital cork-board on which images could be presented as a group or individually. Allowed images to be sorted, annotated, and presented in sequence.

· Due to available sensor technology, did not use multi-touch. However, its interface was entirely based on finger touch gestures that went well beyond typical touch screen interfaces. For example, to advance to the next slide in a sequence or start a video, one flicked to the right. To stop a video, one flicked down. To go back to the previous image, one flicked left.

· The gestures were much richer than these examples. They were self-revealing, could be done eyes free, and leveraged previous work on “marking menus.”

· See a number of demos at: http://www.billbuxton.com/buxtonAliasVideos.html
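The flick vocabulary described above is simple enough to sketch. This is an illustration only, not the Portfolio Wall code: the function name, the 30-pixel minimum travel and the assumption that y grows downward (as in most screen coordinate systems) are mine. Only the gestures named in the text are mapped to actions.

```python
def classify_flick(start, end, min_distance=30.0):
    """Classify a single-finger stroke from its start and end points.

    Points are (x, y) in screen coordinates with y increasing downward.
    Strokes shorter than `min_distance` on both axes count as a tap.
    """
    dx = end[0] - start[0]
    dy = end[1] - start[1]
    if max(abs(dx), abs(dy)) < min_distance:
        return "tap"
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"

# Actions taken from the description; "up" is left unmapped on purpose.
ACTIONS = {
    "tap": "open/close image",
    "right": "next image / start video",
    "left": "previous image",
    "down": "stop video",
}

stroke = classify_flick((100, 200), (260, 215))
print(stroke, "->", ACTIONS.get(stroke))
```

Since only the dominant direction matters, the gesture does not need to be aimed carefully, which fits the "eyes free" claim.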

Touch to open/close image Flick right = next / Flick left = previous

Portfolio Wall (1999)

2001: Diamond Touch (Mitsubishi Research Labs, Cambridge MA) http://www.merl.com/

· An example of a system capable of distinguishing which person's fingers/hands are which, as well as location and pressure.

· Supports a variety of rich gestures.

· http://www.diamondspace.merl.com/

2002: Jun Rekimoto Sony Computer Science Laboratories (Tokyo) http://www.csl.sony.co.jp/person/rekimoto/smartskin/

· SmartSkin: an architecture for making interactive surfaces that are sensitive to human hand and finger gestures. This sensor recognizes multiple hand positions and their shapes as well as calculates the distances between the hands and the surface by using capacitive sensing and a mesh-shaped antenna. In contrast to camera-based gesture recognition systems, all sensing elements can be integrated within the surface, and this method does not suffer from lighting and occlusion problems.

§ Jun Rekimoto (2002). SmartSkin: An Infrastructure for Freehand Manipulation on Interactive Surfaces, Proceedings of ACM SIGCHI.

§ Kentaro Fukuchi and Jun Rekimoto, Interaction Techniques for SmartSkin, ACM UIST2002 demonstration, 2002.

§ SmartSkin demo at Entertainment Computing 2003 (ZDNet Japan)

§ Video demos available at website, above.

2002: Andrew Fentem (UK) http://www.andrewfentem.com/

States that he has been working on multi-touch for music and general applications since 2002

However, appears not to have published any technical information or details on this work in the technical or scientific literature.

Hence, the work from this period is not generally known, and - given the absence of publications - has not been cited.

Therefore it has had little impact on the larger evolution of the field.

This is one example where I am citing work that I have not known and loved for the simple reason that it took place below the radar of normal scientific and technical exchange.

I am sure that there are several similar instances of this. Hence I include this as an example representing the general case.

2003: University of Toronto (Toronto)

· A paper outlining a number of techniques for multi-finger, multi-hand, and multi-user interaction on a single interactive touch display surface.

· Many simpler and previously used techniques are omitted since they were known and obvious.

· Wu, Mike & Balakrishnan, Ravin (2003). Multi-Finger and Whole Hand Gestural Interaction Techniques for Multi-User Tabletop Displays. CHI Letters

Freeform rotation. (a) Two fingers are used to rotate an object. (b) Though the pivot finger is lifted, the second finger can continue the rotation.

This parameter adjustment widget allows two-fingered manipulation.

2003: Jazz Mutant (Bordeaux France) http://www.jazzmutant.com/

· Made one of the first transparent multi-touch screens, one that became - to the best of my knowledge – the first to be offered in a commercial product.

· The product for which the technology was used was the Lemur, a music controller with a true multi-touch screen interface.

· An early version of the Lemur was first shown in public in LA in August of 2004.

2004: TouchLight (Andy Wilson, Microsoft Research): http://research.microsoft.com/~awilson/

· TouchLight (2004). A touch screen display system employing a rear projection display and digital image processing that transforms an otherwise normal sheet of acrylic plastic into a high bandwidth input/output surface suitable for gesture-based interaction. Video demonstration on website.

· Capable of sensing multiple fingers and hands, of one or more users.

· Since the acrylic sheet is transparent, the cameras behind have the potential to be used to scan and display paper documents that are held up against the screen.

2005: Gábor Blaskó and Steven Feiner (Columbia University): http://www1.cs.columbia.edu/~gblasko/

· Using pressure to access virtual devices accessible below top layer devices

· Gábor Blaskó and Steven Feiner (2004). Single-Handed Interaction Techniques for Multiple Pressure-Sensitive Strips, Proc. ACM Conference on Human Factors in Computing Systems (CHI 2004) Extended Abstracts, 1461-1464

2005: PlayAnywhere (Andy Wilson, Microsoft Research): http://research.microsoft.com/~awilson/

· PlayAnywhere (2005). Video on website

· Contribution: sensing and identifying of objects as well as touch.

· A front-projected computer vision-based interactive table system.

· Addresses installation, calibration, and portability issues that are typical of most vision-based table systems.

· Uses an improved shadow-based touch detection algorithm for sensing both fingers and hands, as well as objects.

· Objects can be identified and tracked using a fast, simple visual bar code scheme. Hence, in addition to manual multi-touch, the desk supports interaction using various physical objects, thereby also supporting graspable/tangible style interfaces.

· It can also sense particular objects, such as a piece of paper or a mobile phone, and deliver appropriate and desired functionality depending on which.

2005: Jeff Han (NYU): http://www.cs.nyu.edu/~jhan/

· Very elegant implementation of a number of techniques and applications on a table format rear projection surface.

· Multi-Touch Sensing through Frustrated Total Internal Reflection (2005). Video on website.

· See also the more recent video at: http://fastcompany.com/video/general/perceptivepixel.html

2005: Tactiva (Palo Alto) http://www.tactiva.com/

· Have announced and shown video demos of a product called the TactaPad.

· It uses optics to capture hand shadows and superimpose on computer screen, providing a kind of immersive experience, that echoes back to Krueger (see above)

· Is multi-hand and multi-touch

· Is tactile touch tablet, i.e., the tablet surface feels different depending on what virtual object/control you are touching

2005: Toshiba Matsushita Display Technology (Tokyo)

· Announce and demonstrate LCD display with “Finger Shadow Sensing Input” capability

· One of the first examples of what I referred to above in the 1991 Xerox PARC discussions. It will not be the last.

· The significance is that there is no separate touch sensing transducer. Just as there are RGB pixels that can produce light at any location on the screen, so can pixels detect shadows at any location on the screen, thereby enabling multi-touch in a way that is hard for any separate touch technology to match in performance or, eventually, in price.

· http://www3.toshiba.co.jp/tm_dsp/press/2005/05-09-29.htm

2005: Tomer Moscovich & collaborators (Brown University)

· a number of papers on web site: http://www.cs.brown.edu/people/tm/

· T. Moscovich, T. Igarashi, J. Rekimoto, K. Fukuchi, J. F. Hughes. "A Multi-finger Interface for Performance Animation of Deformable Drawings." Demonstration at UIST 2005 Symposium on User Interface Software and Technology, Seattle, WA, October 2005. (video)

2006: Benko & collaborators (Columbia University & Microsoft Research)

· Some techniques for precise pointing and selection on multi-touch screens

· Benko, H., Wilson, A. D., and Baudisch, P. (2006). Precise Selection Techniques for Multi-Touch Screens. Proc. ACM CHI 2006 (CHI'06: Human Factors in Computing Systems), 1263–1272

· video

2006: Plastic Logic (Cambridge UK)

A flexible e-ink display mounted over a multi-point touch pad, thereby creating an interactive multi-touch display.

Demonstrated in public at the Trade Show of the 2006 SID conference, San Francisco.

2006: Synaptics & Pilotfish (San Jose) http://www.synaptics.com/

· Jointly developed Onyx, a soft multi-touch mobile phone concept using transparent Synaptics touch sensor. Can sense difference of size of contact. Hence, the difference between finger (small) and cheek (large), so you can answer the phone just by holding to cheek, for example.

· http://www.synaptics.com/onyx/

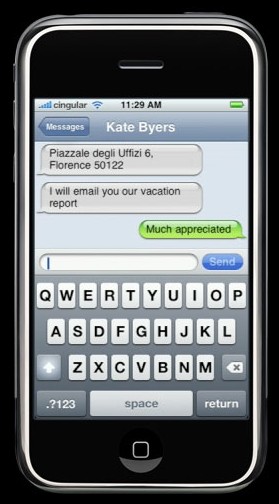

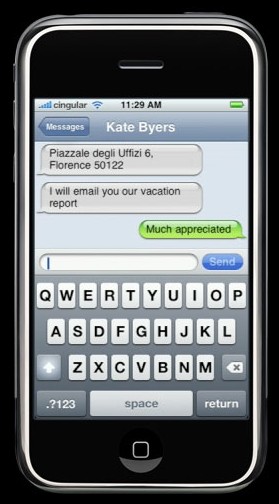

2007: Apple iPhone http://www.apple.com/iphone/technology/

· Like the Simon (see above), a mobile phone with a soft touch-based interface

· Has multi-touch capability

· Uses it, for example, to support the well-known technique of "pinching", i.e., using the thumb and index finger of one hand to make a pinching gesture on a map or photo to zoom in or out.

· Works especially well with web pages in the browser

· Strangely, does not enable use of multi-touch to hold shift key with one finger in order to type an upper case character with another with the soft virtual keyboard.

2007: Microsoft Surface Computing http://www.surface.com/

· Interactive table surface

· Capable of sensing multiple fingers and hands

· Capable of identifying various objects and their position on the surface

· Commercial manifestation of internal research begun in 2001 by Andy Wilson (see above) and Steve Bathiche

· A key indication of this technology making the transition from research, development and demo to mainstream commercial applications.

The history of Multi-touch:

http://www.billbuxton.com/multitouchOverview.html

An Incomplete Roughly Annotated Chronology of Multi-Touch and Related Work

In the beginning ....: Typing & N-Key Rollover (IBM and others).

While it may seem a long way from multi-touch screens, the story of multi-touch starts with keyboards.

Yes they are mechanical devices, "hard" rather than "soft" machines. But they do involve multi-touch of a sort.

First, most obviously, we see sequences, such as the SHIFT, Control, Fn or ALT keys in combination with others. These are cases where we want multi-touch.

Second, there are the cases of unintentional, but inevitable, multiple simultaneous key presses which we want to make proper sense of, the so-called question of n-key rollover (where you push the next key before releasing the previous one).

Photo Credit

1982: Flexible Machine Interface (Nimish Mehta , University of Toronto).

· The first multi-touch system that I am aware of.

· Consisted of a frosted-glass panel whose local optical properties were such that when viewed behind with a camera a black spot whose size depended on finger pressure appeared on an otherwise white background. This with simple image processing allowed multi touch input picture drawing, etc. At the time we discussed the notion of a projector for defining the context both for the camera and the human viewer.

· Mehta, Nimish (1982), A Flexible Machine Interface, M.A.Sc. Thesis, Department of Electrical Engineering, University of Toronto supervised by Professor K.C. Smith.

1983: Soft Machines (Bell Labs, Murray Hill)

· This is the first paper that I am aware of in the user interface literature that attempts to provide a comprehensive discussion the properties of touch-screen based user interfaces, what they call “soft machines”.

· While not about multi-touch specifically, this paper outlined many of the attributes that make this class of system attractive for certain contexts and applications.

· Nakatani, L. H. & Rohrlich, John A. (1983). Soft Machines: A Philosophy of User-Computer Interface Design. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI’83), 12-15.

1983: Video Place / Video Desk (Myron Krueger)

· A vision based system that tracked the hands and enabled multiple fingers, hands, and people to interact using a rich set of gestures.

· Implemented in a number of configurations, including table and wall.

· Didn’t sense touch, per se, so largely relied on dwell time to trigger events intended by the pose.

· Essentially “wrote the book” in terms of unencumbered (i.e., no gloves, mice, styli, etc.) rich gestural interaction. Work that was more than a decade ahead of its time and hugely influential, yet not as acknowledged as it should be.

· Krueger, Myron, W. (1983). Artificial Reality. Reading, MA: Addison-Wesley.

· Krueger, Myron, W. (1991). Artificial Reality II. Reading, MA: Addison-Wesley.

· Krueger, Myron, W., Gionfriddo, Thomas., & Hinrichsen, Katrin (1985). VIDEOPLACE - An Artificial Reality, Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI’85), 35 - 40.

Myron’s work had a staggeringly rich repertoire of gestures, multi-finger, multi-hand and multi-person interaction.
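Since a camera-only system like this cannot tell when a finger actually touches, dwell time has to stand in for the "click". A minimal sketch of dwell triggering, assuming tracked positions arrive as (time, x, y) samples; the function name dwell_events and the radius and dwell_time values are hypothetical and not taken from Krueger's system:

```python
import math


def dwell_events(samples, radius=10.0, dwell_time=0.5):
    """Yield (t, x, y) once each time the tracked point rests in place.

    `samples` is an iterable of (t, x, y) tuples in time order, with t in
    seconds. A dwell fires when the point stays within `radius` of where
    it came to rest for at least `dwell_time` seconds.
    """
    anchor = None   # (t, x, y) where the current rest period began
    fired = False   # whether this rest period has already triggered
    for t, x, y in samples:
        if anchor is None or math.hypot(x - anchor[1], y - anchor[2]) > radius:
            # Moved too far: restart the timer at the new position.
            anchor = (t, x, y)
            fired = False
        elif not fired and t - anchor[0] >= dwell_time:
            fired = True
            yield (t, anchor[1], anchor[2])
```

The fired flag keeps a long rest from triggering repeatedly; the hand has to move away and settle again before another event is produced.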

1984: Multi-Touch Screen (Bell Labs, Murray Hill NJ)

· A multi-touch touch screen, not tablet, integrated with a CRT on an interactive graphics terminal. Could manipulate graphical objects with fingers with excellent response time.

· Shown to me by Lloyd Nakatani (see above), who invited me to visit Bell Labs after seeing the presentation of our work at SIGCHI in 1985.

· I believe that the one that I saw in 1985 may be the one patented by Leonard Kasday (US Patent 4484179) in 1984, which was multitouch; however my recollection is that the one that I saw was capacitive, not optical.

· This work is at least contemporary with our work in Toronto, and likely precedes it.

· Since their technology was transparent and faster than ours, my view was that they were ahead of us, so we stopped working on hardware (expecting that we would get access to theirs) and focused on the software and the interaction side, which was our strength.

· Around 1990 I took a group from Xerox to see it since we were considering using it for a photocopy interface.

1985: Multi-Touch Tablet (Input Research Group, University of Toronto): http://www.billbuxton.com/papers.html#anchor1439918

· Developed a touch tablet capable of sensing an arbitrary number of simultaneous touch inputs, reporting both location and degree of touch for each.

· To put things in historical perspective, this work was done in 1984, the same year the first Macintosh computer was introduced.

· Used capacitance, rather than optical sensing so was thinner and much simpler than camera-based systems.

· A Multi-Touch Three Dimensional Touch-Sensitive Tablet (1985). Videos at: http://www.billbuxton.com/buxtonIRGVideos.html

· Issues and techniques in touch-sensitive tablet input (1985). Videos at: http://www.billbuxton.com/buxtonIRGVideos.html

1986: Bi-Manual Input (University of Toronto)

In 1985 we did a study, published the following year, which examined the benefits of two different compound bi-manual tasks that involved continuous control with each hand.

The first was a positioning/scaling task. That is, one had to move a shape to a particular location on the screen with one hand, while adjusting its size to match a particular target with the other.

The second was a selection/navigation task. That is, one had to navigate to a particular location in a document that was currently off-screen, with one hand, then select it with the other.

Since bi-manual continuous control was still not easy to do (the ADB had not yet been released - see below), we emulated the Macintosh with another computer, a PERQ.

The results demonstrated that such continuous bi-manual control was both easy for users, and resulted in significant improvements in performance and learning.

See Buxton, W. & Myers, B. (1986). A study in two-handed input. Proceedings of CHI '86, 321-326.[video]

Despite this capability being technologically and economically viable since 1986 (with the advent of the ADB - see below - and later USB), there are still no mainstream systems that take advantage of this basic capability. Too bad.

This is an example of techniques developed for multi-device and multi-hand that can easily transfer to multi-touch devices.

1986: Apple Desktop Bus (ADB) and the Trackball Scroller Init (Apple Computer / University of Toronto)

The Macintosh II and Macintosh SE were released with the Apple Desktop Bus. This can be thought of as an early version of the USB.

It supported plug-and-play, and also enabled multiple input devices (keyboards, trackballs, joysticks, mice, etc.) to be plugged into the same computer simultaneously.

The only downside was that if you plugged in two pointing devices, by default, the software did not distinguish them. They both did the same thing, and if a mouse and a trackball were operated at the same time (which they could be), a kind of tug-of-war resulted for the tracking symbol on the screen.

My group at the University of Toronto wanted to take advantage of this multi-device capability and contacted friends at Apple's Advanced Technology Group for help.

Thanks to the efforts of Gina Venolia and Michael Chen, they produced a simple "init", called the trackballscroller init, that could be dropped into the systems folder.

It enabled the mouse, for example, to be designated the pointing device, and a trackball, for example, to control scrolling independently in X and Y. See, for example, Buxton, W. (1990). The Natural Language of Interaction: A Perspective on Non-Verbal Dialogues. In Laurel, B. (Ed.). The Art of Human-Computer Interface Design, Reading, MA: Addison-Wesley. 405-416.
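The difference between the default behaviour and what the init made possible can be sketched as a question of event routing. The code below is purely illustrative: it is not the ADB protocol or the actual init, and the names UIState, apply_merged and apply_routed are hypothetical. Each event is assumed to be a (device, dx, dy) tuple.

```python
from dataclasses import dataclass


@dataclass
class UIState:
    cursor_x: int = 0
    cursor_y: int = 0
    scroll_x: int = 0
    scroll_y: int = 0


def apply_merged(state, events):
    """Default case: every pointing device drives the same cursor."""
    for _device, dx, dy in events:
        state.cursor_x += dx
        state.cursor_y += dy
    return state


def apply_routed(state, events, roles):
    """Routed case: each device's motion goes to its assigned role.

    `roles` maps a device name to "point" or "scroll"; devices without an
    entry fall back to pointing.
    """
    for device, dx, dy in events:
        if roles.get(device, "point") == "scroll":
            state.scroll_x += dx
            state.scroll_y += dy
        else:
            state.cursor_x += dx
            state.cursor_y += dy
    return state
```

With a mouse moving right and a trackball moving left at the same moment, apply_merged cancels the two motions against each other (the tug-of-war), while apply_routed lets one hand point and the other scroll.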

They also provided another init that enabled us to grab the signals from the second device and use it to control a range of other functions. See, for example, Kabbash, P., Buxton, W. & Sellen, A. (1994). Two-Handed Input in a Compound Task. Proceedings of CHI '94, 417-423.

In short, with this technology, we were able to deliver the benefits demonstrated by Buxton & Myers (see above) on standard hardware, without changes to the operating system, and largely without changes even to the applications.

This is the closest that we came, without actually getting there, to supporting multi-point input - such as all of the two-point stretching, etc. that is getting so much attention now, 20 years later. It was technologically and economically viable then.

To our disappointment, Apple never took advantage of this - one of their most interesting - innovations.

1991: Bidirectional Displays (Bill Buxton & Colleagues, Xerox PARC)

· First discussions about the feasibility of making an LCD display that was also an input device, i.e., where pixels were input as well as output devices. Led to two initiatives. (Think of the paper-cup and string “walkie-talkies” that we all made as kids: the cups were bidirectional and functioned simultaneously as both a speaker and a microphone.)

· Took the high res 2D a-Si scanner technology used in our scanners and added layers to make them displays. The bi-directional motivation got lost in the process, but the result was the dpix display (http://www.dpix.com/about.html).

· The Liveboard project. The rear projection Liveboard was initially conceived as a quick prototype of a large flat panel version that used a tiled array of bi-directional dpix displays.

1991: Digital Desk (Pierre Wellner, Rank Xerox EuroPARC, Cambridge)

· An early front projection table top system that used optical and acoustic techniques to sense both hands/fingers as well as certain objects, in particular, paper-based controls and data.

· Clearly demonstrated multi-touch concepts such as two finger scaling and translation of graphical objects, using either a pinching gesture or a finger from each hand, for example.

· A classic paper in the literature on augmented reality.

· Wellner, P. (1991). The DigitalDesk Calculator: Tactile manipulation on a desktop display. Proceedings of the Fourth Annual Symposium on User Interface Software and Technology (UIST '91), 27-33.

1992: Flip Keyboard (Bill Buxton, Xerox PARC): http://www.billbuxton.com/

· A multi-touch pad integrated into the bottom of a keyboard. You flip the keyboard to gain access to the multi-touch pad for rich gestural control of applications.

· Combined keyboard / touch tablet input device (1994). Video at: http://www.billbuxton.com/flip_keyboard_s.mov (video 2002 in conjunction with Tactex Controls)

Sound Synthesizer / Audio Mixer: graphics on multi-touch surface defining controls for various virtual devices.

1992: Simon (IBM & Bell South)

· IBM and Bell South release what was arguably the world's first smart phone, the Simon.

· What is of historical interest is that the Simon, like the iPhone, relied on a touch-screen driven “soft machine” user interface.

· While only a single-touch device, the Simon foreshadows a number of aspects of what we are seeing in some of the touch-driven mobile devices that we see today.

· Sidebar: my working Simon is one of the most prized pieces in my collection of input devices.

1992: Wacom (Japan)

In 1992 Wacom introduced their UD series of digitizing tablets. These were special in that they had multi-device / multi-point sensing capability. They could sense the position of the stylus and tip pressure, as well as simultaneously sense the position of a mouse-like puck. This enabled bimanual input.

Working with Wacom, my lab at the University of Toronto developed a number of ways to exploit this technology to go far beyond just the stylus and puck. See the work on Graspable/Tangible interfaces, below.

Their next two generations of tablets, the Intuos 1 (1998) and Intuos 2 (2001) series, extended the multi-point capability. It enabled the sensing of the location of the stylus in x and y, plus tilt in x and tilt in y (making the stylus a location-sensitive joystick, in effect), tip pressure, and a value from a side-mounted dial on their airbrush stylus. As well, one could simultaneously sense the position and rotation of the puck, as well as the rotation of a wheel on its side. In total, one was able to have control of 10 degrees of freedom using two hands.

While this may seem extravagant and hard to control, that all depended on how it was used. For example, all of these signals, coupled with bimanual input, are needed to implement any digital airbrush worthy of the name. With these technologies we were able to do just that with my group at AliasWavefront, again, with the cooperation of Wacom.

See also: Leganchuk, A., Zhai, S. & Buxton, W. (1998). Manual and Cognitive Benefits of Two-Handed Input: An Experimental Study. ACM Transactions on Computer-Human Interaction, 5(4), 326-359.

1992: Starfire (Bruce Tognazzini, Sun Microsystems)

· Bruce Tognazzini produced a future envisionment film, Starfire, that included a number of multi-hand, multi-finger interactions, including pinching, etc.

1994-2002: Bimanual Research (AliasWavefront Toronto)

· Developed a number of innovative techniques for multi-point / multi-handed input for rich manipulation of graphics and other visually represented objects. Only some are mentioned specifically on this page.

· A number of videos that illustrate these techniques, along with others, can be seen at: http://www.billbuxton.com/buxtonAliasVideos.html

· Also see papers on two-handed input to see examples of multi-point manipulation of objects at: http://www.billbuxton.com/papers.html#anchor1442822

1995: Graspable/Tangible Interfaces (Input Research Group, University of Toronto)

· Demonstrated concept and later implementation of sensing the identity, location and even rotation of multiple physical devices on a digital desk-top display and using them to control graphical objects.

· By means of the resulting article and associated thesis introduced the notion of what has come to be known as “graspable” or “tangible” computing.

· Fitzmaurice, G.W., Ishii, H. & Buxton, W. (1995). Bricks: Laying the foundations for graspable user interfaces. Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (CHI'95), 442–449.

1995: DSI Datotech (Vancouver BC)

· In 1995 this company made a touch tablet, the HandGear, capable of multipoint sensing. They also developed a software package, Gesture Recognition Technology (GRT), for recognizing hand gestures captured with the tablet.

· The company went out of business around 2002.

1995/97: Active Desk (Input Research Group / Ontario Telepresence Project, University of Toronto)

Around 1992 we made a drafting-table-sized desk that had a rear-projection data display, where the rear projection screen/table top was a translucent, stylus-controlled digital graphics tablet (Scriptel). The stylus was operated with the dominant hand. Prior to 1995 we mounted a camera above the table top. It tracked the position of the non-dominant hand on the tablet surface, as well as the pose (open angle) between the thumb and index finger. The non-dominant hand could grasp and manipulate objects based on what it was over, by opening and closing the grip on the virtual object. This vision work was done by a student, Yuyan Liu.

Buxton, W. (1997). Living in Augmented Reality: Ubiquitous Media and Reactive Environments. In K. Finn, A. Sellen & S. Wilber (Eds.). Video Mediated Communication. Hillsdale, N.J.: Erlbaum, 363-384. An earlier version of this chapter also appears in Proceedings of Imagina '95, 215-229.

Simultaneous bimanual and multi-finger interaction on large interactive display surface

1997: T3 (AliasWavefront, Toronto)

T3 was a bimanual tablet-based system that utilized a number of techniques that work equally well on multi-touch devices, and have been used thus.

These include, but are not restricted to, grabbing the drawing surface itself from two points and scaling its size (i.e., zooming in/out) by moving the hands apart or towards each other (respectively). Likewise, the same could be done with individual graphical objects that lay on the background. (Note, this was simply a multi-point implementation of a concept seen in Ivan Sutherland’s Sketchpad system.)

Likewise, one could grab the background or an object and rotate it using two points, thereby controlling both the pivot point and degree of the rotation simultaneously. Ditto for translating (moving) the object or page.

Of interest is that one could combine these primitives, such as translate and scale, simultaneously (ideas foreshadowed by Fitzmaurice’s graspable interface work – above).

Kurtenbach, G., Fitzmaurice, G., Baudel, T. & Buxton, W. (1997). The design and evaluation of a GUI paradigm based on tablets, two-hands, and transparency. Proceedings of the 1997 ACM Conference on Human Factors in Computing Systems, CHI '97, 35-42. [video].
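The two-point scale, rotate and translate primitives described for T3 reduce to a little geometry on the segment joining the two contact points. The sketch below is not the T3 code; it is a minimal illustration, and the function name two_point_transform and its return convention are assumptions made for this example.

```python
import math


def two_point_transform(p1_old, p2_old, p1_new, p2_new):
    """Return (scale, rotation, translation) implied by a two-point drag.

    scale       -- ratio of the new to the old distance between the points
    rotation    -- change in the segment's angle, in radians, in (-pi, pi]
    translation -- (dx, dy) displacement of the segment's midpoint
    """
    dx0, dy0 = p2_old[0] - p1_old[0], p2_old[1] - p1_old[1]
    dx1, dy1 = p2_new[0] - p1_new[0], p2_new[1] - p1_new[1]
    old_len = math.hypot(dx0, dy0)
    if old_len == 0:
        raise ValueError("the two starting points must be distinct")
    scale = math.hypot(dx1, dy1) / old_len
    raw = math.atan2(dy1, dx1) - math.atan2(dy0, dx0)
    # Wrap the angle difference back into (-pi, pi].
    rotation = math.atan2(math.sin(raw), math.cos(raw))
    mid_old = ((p1_old[0] + p2_old[0]) / 2, (p1_old[1] + p2_old[1]) / 2)
    mid_new = ((p1_new[0] + p2_new[0]) / 2, (p1_new[1] + p2_new[1]) / 2)
    translation = (mid_new[0] - mid_old[0], mid_new[1] - mid_old[1])
    return scale, rotation, translation
```

All three values come out of one pair of point positions, which is why the primitives can be combined simultaneously: moving the hands apart while turning and dragging them yields a scale, a rotation and a translation in the same update. The same arithmetic applies whether the two points come from two devices, two hands, or two fingers on a multi-touch surface.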

1997: The Haptic Lens (Mike Sinclair, Georgia Tech / Microsoft Research)

· The Haptic Lens, a multi-touch sensor that had the feel of clay, in that it deformed the harder you pushed, and resumed its basic form when released. A novel and very interesting approach to this class of device.

· Sinclair, Mike (1997). The Haptic Lens. ACM SIGGRAPH 97 Visual Proceedings: The art and interdisciplinary programs of SIGGRAPH '97, 179.

1998: Tactex Controls (Victoria BC) http://www.tactex.com/

· Kinotex controller developed in 1998 and shipped in a music touch controller, the MTC Express, in 2000. Seen in video at: http://www.billbuxton.com/flip_keyboard_s.mov

~1998: Fingerworks (Newark, Delaware).

· Made a range of touch tablets with multi-touch sensing capabilities, including the iGesture Pad. They supported a fairly rich library of multi-point / multi-finger gestures.

· Founded by two University of Delaware academics, John Elias and Wayne Westerman

· Largely based on Westerman’s thesis: Westerman, Wayne (1999). Hand Tracking, Finger Identification, and Chordic Manipulation on a Multi-Touch Surface. U of Delaware PhD Dissertation.

· http://www.ee.udel.edu/~westerma/main.pdf

· Company is now out of business.

· Assets, including Elias and Westerman, acquired by Apple.

· However, documentation, including tutorials and manuals, is still downloadable from: http://www.fingerworks.com/downloads.html

1999: Portfolio Wall (AliasWavefront, Toronto On, Canada)

· A product that was a digital cork-board on which images could be presented as a group or individually. Allowed images to be sorted, annotated, and presented in sequence.

· Due to available sensor technology, did not use multi-touch. However, its interface was entirely based on finger touch gestures that went well beyond typical touch screen interfaces. For example, to advance to the next slide in a sequence or start a video, one flicked to the right. To stop a video, one flicked down. To go back to the previous image, one flicked left.

· The gestures were much richer than these examples. They were self-revealing, could be done eyes free, and leveraged previous work on “marking menus.”

· See a number of demos at: http://www.billbuxton.com/buxtonAliasVideos.html

Touch to open/close image. Flick right = next / Flick left = previous.

Portfolio Wall (1999)
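The flick vocabulary listed above (right = next, left = previous, down = stop) can be illustrated with a small direction classifier. This is only a sketch, not the Portfolio Wall implementation, whose gestures were richer and built on marking menus. The names classify_flick and ACTIONS, the min_speed value, and the assumption of screen coordinates with y growing downward are all hypothetical.

```python
import math

# Mapping taken from the flick examples in the text above.
ACTIONS = {"right": "next", "left": "previous", "down": "stop"}


def classify_flick(start, end, duration, min_speed=500.0):
    """Return "right", "left", "up", "down", or None for a single stroke.

    `start` and `end` are (x, y) points in pixels with y growing downward,
    and `duration` is in seconds. Strokes slower than `min_speed` pixels
    per second are treated as ordinary drags rather than flicks.
    """
    if duration <= 0:
        return None
    dx, dy = end[0] - start[0], end[1] - start[1]
    if math.hypot(dx, dy) / duration < min_speed:
        return None
    # The dominant axis decides the direction.
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"
```

Because only the direction and speed of the stroke matter, not where on the screen it starts, gestures of this kind can be performed without looking, which fits the "eyes free" property noted above.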

2001: Diamond Touch (Mitsubishi Research Labs, Cambridge MA) http://www.merl.com/

· An example capable of distinguishing which person's fingers/hands are which, as well as location and pressure.

· Supports a variety of rich gestures.

· http://www.diamondspace.merl.com/

2002: Jun Rekimoto, Sony Computer Science Laboratories (Tokyo) http://www.csl.sony.co.jp/person/rekimoto/smartskin/

· SmartSkin: an architecture for making interactive surfaces that are sensitive to human hand and finger gestures. This sensor recognizes multiple hand positions and their shapes as well as calculates the distances between the hands and the surface by using capacitive sensing and a mesh-shaped antenna. In contrast to camera-based gesture recognition systems, all sensing elements can be integrated within the surface, and this method does not suffer from lighting and occlusion problems.

§ Jun Rekimoto (2002). SmartSkin: An Infrastructure for Freehand Manipulation on Interactive Surfaces, Proceedings of ACM SIGCHI.

§ Kentaro Fukuchi and Jun Rekimoto, Interaction Techniques for SmartSkin, ACM UIST2002 demonstration, 2002.

§ SmartSkin demo at Entertainment Computing 2003 (ZDNet Japan)

§ Video demos available at website, above.

2002: Andrew Fentem (UK) http://www.andrewfentem.com/

States that he has been working on multi-touch for music and general applications since 2002.

However, appears not to have published any technical information or details on this work in the technical or scientific literature.

Hence, the work from this period is not generally known, and - given the absence of publications - has not been cited.

Therefore it has had little impact on the larger evolution of the field.

This is one example where I am citing work that I have not known and loved for the simple reason that it took place below the radar of normal scientific and technical exchange.

I am sure that there are several similar instances of this. Hence I include this as an example representing the general case.

2003: University of Toronto (Toronto)

· Paper outlining a number of techniques for multi-finger, multi-hand, and multi-user interaction on a single interactive touch display surface.

· Many simpler and previously used techniques are omitted since they were known and obvious.

· Wu, Mike & Balakrishnan, Ravin (2003). Multi-Finger and Whole Hand Gestural Interaction Techniques for Multi-User Tabletop Displays. CHI Letters.

Freeform rotation. (a) Two fingers are used to rotate an object. (b) Though the pivot finger is lifted, the second finger can continue the rotation.

This parameter adjustment widget allows two-fingered manipulation.

2003: Jazz Mutant (Bordeaux France) http://www.jazzmutant.com/

· Made one of the first transparent multi-touch screens, one that became - to the best of my knowledge - the first to be offered in a commercial product.

· The product for which the technology was used was the Lemur, a music controller with a true multi-touch screen interface.

· An early version of the Lemur was first shown in public in LA in August of 2004.

2004: TouchLight (Andy Wilson, Microsoft Research): http://research.microsoft.com/~awilson/

· TouchLight (2004). A touch screen display system employing a rear projection display and digital image processing that transforms an otherwise normal sheet of acrylic plastic into a high bandwidth input/output surface suitable for gesture-based interaction. Video demonstration on website.

· Capable of sensing multiple fingers and hands, of one or more users.

· Since the acrylic sheet is transparent, the cameras behind have the potential to be used to scan and display paper documents that are held up against the screen.

2005: Gábor Blaskó and Steven Feiner (Columbia University): http://www1.cs.columbia.edu/~gblasko/

· Using pressure to access virtual devices accessible below top layer devices.

· Gábor Blaskó and Steven Feiner (2004). Single-Handed Interaction Techniques for Multiple Pressure-Sensitive Strips, Proc. ACM Conference on Human Factors in Computing Systems (CHI 2004) Extended Abstracts, 1461-1464.

2005: PlayAnywhere (Andy Wilson, Microsoft Research): http://research.microsoft.com/~awilson/

· PlayAnywhere (2005). Video on website

· Contribution: sensing and identifying of objects as well as touch.

· A front-projected computer vision-based interactive table system.

· Addresses installation, calibration, and portability issues that are typical of most vision-based table systems.

· Uses an improved shadow-based touch detection algorithm for sensing both fingers and hands, as well as objects.

· Objects can be identified and tracked using a fast, simple visual bar code scheme. Hence, in addition to manual multi-touch, the desk supports interaction using various physical objects, thereby also supporting graspable/tangible style interfaces.

· It can also sense particular objects, such as a piece of paper or a mobile phone, and deliver appropriate and desired functionality depending on which.

2005: Jeff Han (NYU): http://www.cs.nyu.edu/~jhan/

· Very elegant implementation of a number of techniques and applications on a table format rear projection surface.

· Multi-Touch Sensing through Frustrated Total Internal Reflection (2005). Video on website.

· See also the more recent video at: http://fastcompany.com/video/general/perceptivepixel.html

2005: Tactiva (Palo Alto) http://www.tactiva.com/

· Have announced and shown video demos of a product called the TactaPad.

· It uses optics to capture hand shadows and superimpose them on the computer screen, providing a kind of immersive experience that echoes back to Krueger (see above).

· Is multi-hand and multi-touch

· Is tactile touch tablet, i.e., the tablet surface feels different depending on what virtual object/control you are touching

2005: Toshiba Matsushita Display Technology (Tokyo)

· Announce and demonstrate LCD display with “Finger Shadow Sensing Input” capability

· One of the first examples of what I referred to above in the 1991 Xerox PARC discussions. It will not be the last.

· The significance is that there is no separate touch sensing transducer. Just as there are RGB pixels that can produce light at any location on the screen, so can pixels detect shadows at any location on the screen, thereby enabling multi-touch in a way that is hard for any separate touch technology to match in performance or, eventually, in price.

· http://www3.toshiba.co.jp/tm_dsp/press/2005/05-09-29.htm

2005: Tomer Moscovich & collaborators (Brown University)

· a number of papers on web site: http://www.cs.brown.edu/people/tm/

· T. Moscovich, T. Igarashi, J. Rekimoto, K. Fukuchi, J. F. Hughes. "A Multi-finger Interface for Performance Animation of Deformable Drawings." Demonstration at UIST 2005 Symposium on User Interface Software and Technology, Seattle, WA, October 2005. (video)

2006: Benko & collaborators (Columbia University & Microsoft Research)

· Some techniques for precise pointing and selection on multi-touch screens

· Benko, H., Wilson, A. D., and Baudisch, P. (2006). Precise Selection Techniques for Multi-Touch Screens. Proc. ACM CHI 2006 (CHI'06: Human Factors in Computing Systems), 1263–1272.

2006: Plastic Logic (Cambridge UK)

A flexible e-ink display mounted over a multi-point touch pad, thereby creating an interactive multi-touch display.

Demonstrated in public at the Trade Show of the 2006 SID conference, San Francisco.

2006: Synaptics & Pilotfish (San Jose) http://www.synaptics.com/

· Jointly developed Onyx, a soft multi-touch mobile phone concept using transparent Synaptics touch sensor. Can sense difference of size of contact. Hence, the difference between finger (small) and cheek (large), so you can answer the phone just by holding to cheek, for example.

· http://www.synaptics.com/onyx/

2007: Apple iPhone http://www.apple.com/iphone/technology/

· Like the Simon (see above), a mobile phone with a soft touch-based interface

· Has multi-touch capability

· Uses it, for example, to support the well-known technique of "pinching", i.e., using the thumb and index finger of one hand to articulate a pinching gesture on a map or photo to zoom in or out.

· Works especially well with web pages in the browser

· Strangely, does not enable use of multi-touch to hold shift key with one finger in order to type an upper case character with another with the soft virtual keyboard.

2007: Microsoft Surface Computing http://www.surface.com/

· Interactive table surface

· Capable of sensing multiple fingers and hands

· Capable of identifying various objects and their position on the surface

· Commercial manifestation of internal research begun in 2001 by Andy Wilson (see above) and Steve Bathiche

· A key indication of this technology making the transition from research, development and demo to mainstream commercial applications.

Labels:

Technology - Devices,

Technology - Touch
